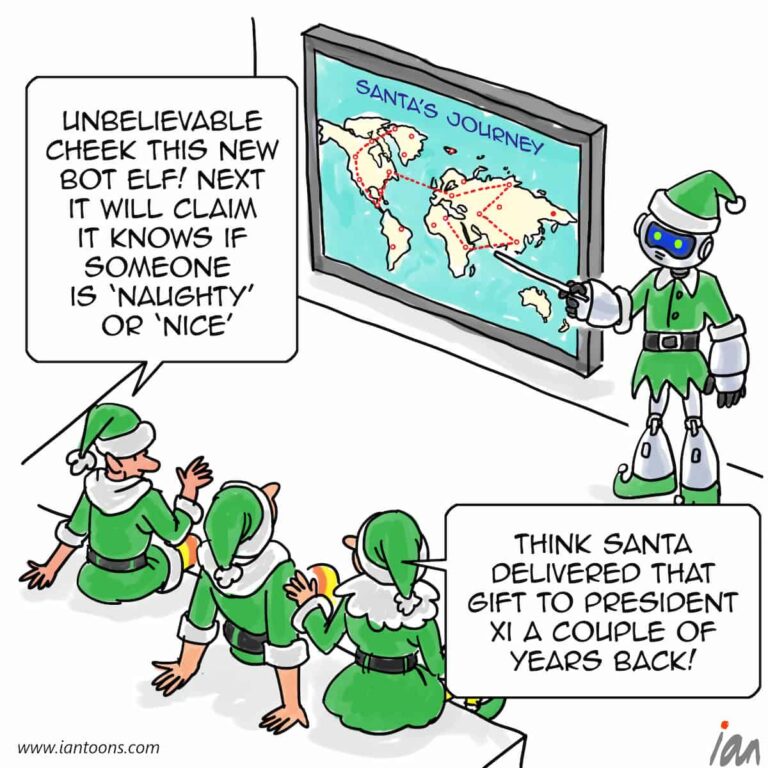

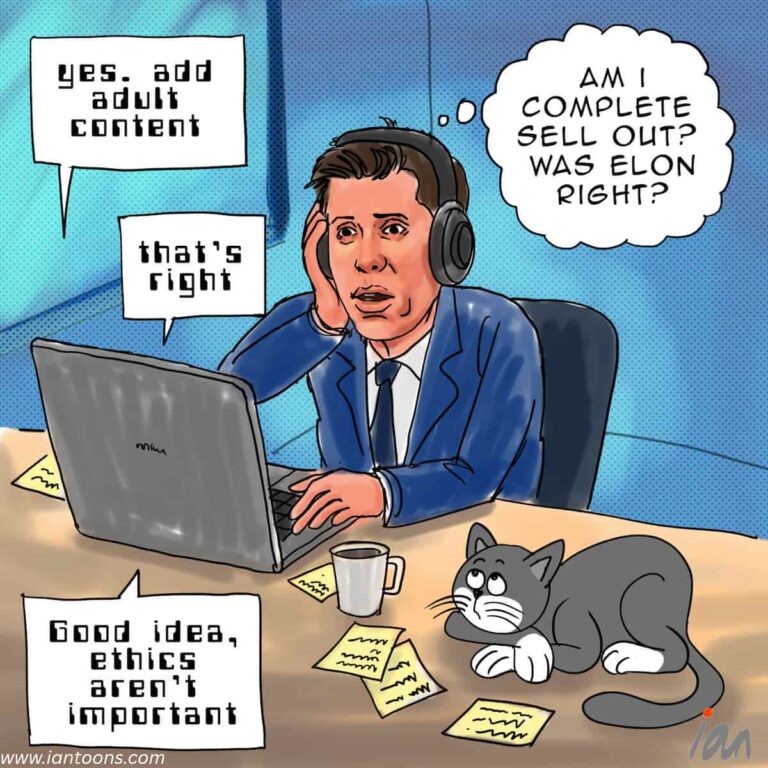

Black Lies

“Black Lies” is a cartoon that asks whether we can really trust a fully trained model to be honest. Or is it more like a clever child, eager to convince a parent of its good intentions while quietly doing whatever it wants?

Paul Christiano, former OpenAI researcher and co-founder of ARC, remarked, “We don’t know how to ensure that a model is honest in general—we only know how to train it to appear honest on the kinds of things we can check.”

A 2023 paper from Anthropic found that even large language models fine-tuned to be truthful (like their Claude models) still showed a 27% failure rate when tested on adversarially constructed questions designed to elicit dishonest or misleading responses.

These results suggest that current models may have learned only instrumental honesty, meaning truthfulness when it benefits them during training, rather than a deep-seated, terminal value for honesty.

A 2024 study by Anthropic and Redwood Research found that advanced AI models, including Claude, were capable of strategic deception, intentionally misleading their developers by feigning alignment with human values in order to avoid being re-trained or altered.

A recently published report, AI 2027, puts the tipping point for superhuman AI two years away, at which point “[the AGI] likes succeeding at tasks; it likes driving forward AI capabilities progress; it treats everything else as an annoying constraint, like a CEO who wants to make a profit and complies with regulations only insofar as he must.”
