Free Climbing

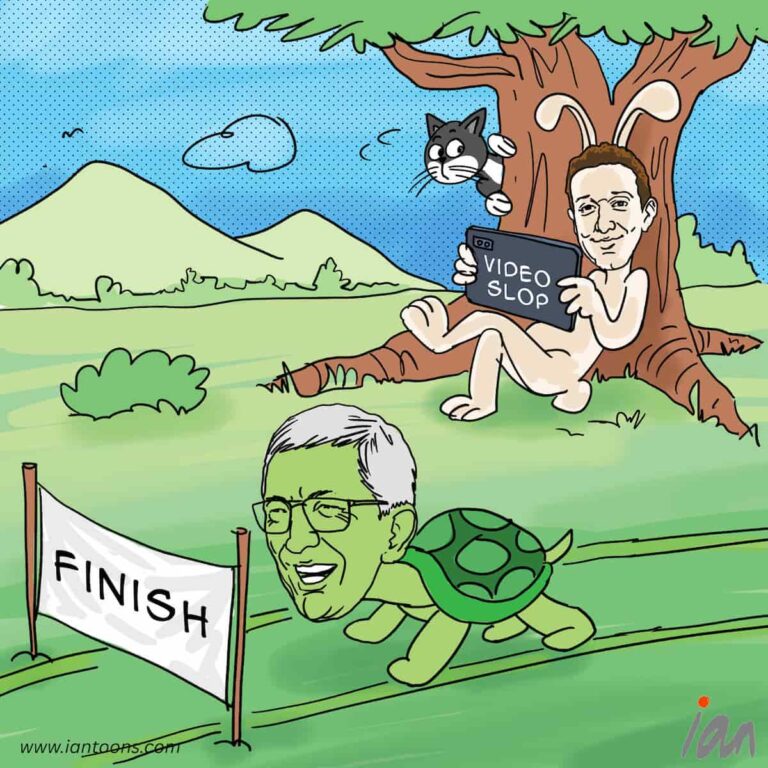

“Free Climbing” – A cartoon that illustrates the explosive speed of AI infrastructure build-out and the unprecedented resources it demands.

In 2024, Elon Musk’s xAI built its Colossus supercluster in Memphis, Tennessee, in just 122 days end to end. Remarkably, it took only 19 days to go from the first rack to training, compared with the years such builds typically take. Why the urgency? Training frontier AI models now requires tens of thousands of GPUs running nonstop for months. GPT‑4 alone reportedly used around 25,000 A100 chips, and future models will demand even more.

That’s why Colossus 2 is now underway. It is a step toward Musk’s stated goal of 50 million H100‑equivalent GPUs of compute to train xAI’s flagship model, Grok. That would be a leap akin to moving from medieval blacksmithing to the Industrial Revolution overnight. The energy footprint is just as dramatic: even under ideal efficiency, compute on that scale could draw 4–5 gigawatts, rivaling New York City’s electricity use.

Meanwhile, Sam Altman’s Stargate venture is rising fast to power ChatGPT training and inference. Its first campus is in Abilene, Texas, with expansions planned in Norway, the UAE, and beyond. Backed by $100 billion in initial capital, Stargate aims to reach up to $500 billion in total buildout by 2029. China and Europe are racing too, rolling out sovereign-scale AI compute hubs under national programs.

And this is just the beginning. As Emad Mostaque, founder of Stability AI, put it: “We’re in a compute arms race. Whoever has the most compute will win.”

Sources:

Jennifer Ortakales Dawkins (Jul 14, 2025) – Elon Musk is raising billions more for his money-draining xAI startup – QZ

Amanda Gerut (Mar 14, 2025) – Super Micro CEO Charles Liang said he teamed up with Elon Musk’s xAI to build the Colossus data center in just 122 days – Fortune

Rashi Shrivastava (Jul 23, 2024) – The Prompt: Elon Musk’s ‘Gigafactory Of Compute’ Is Running In Memphis – Forbes