Hallucinations

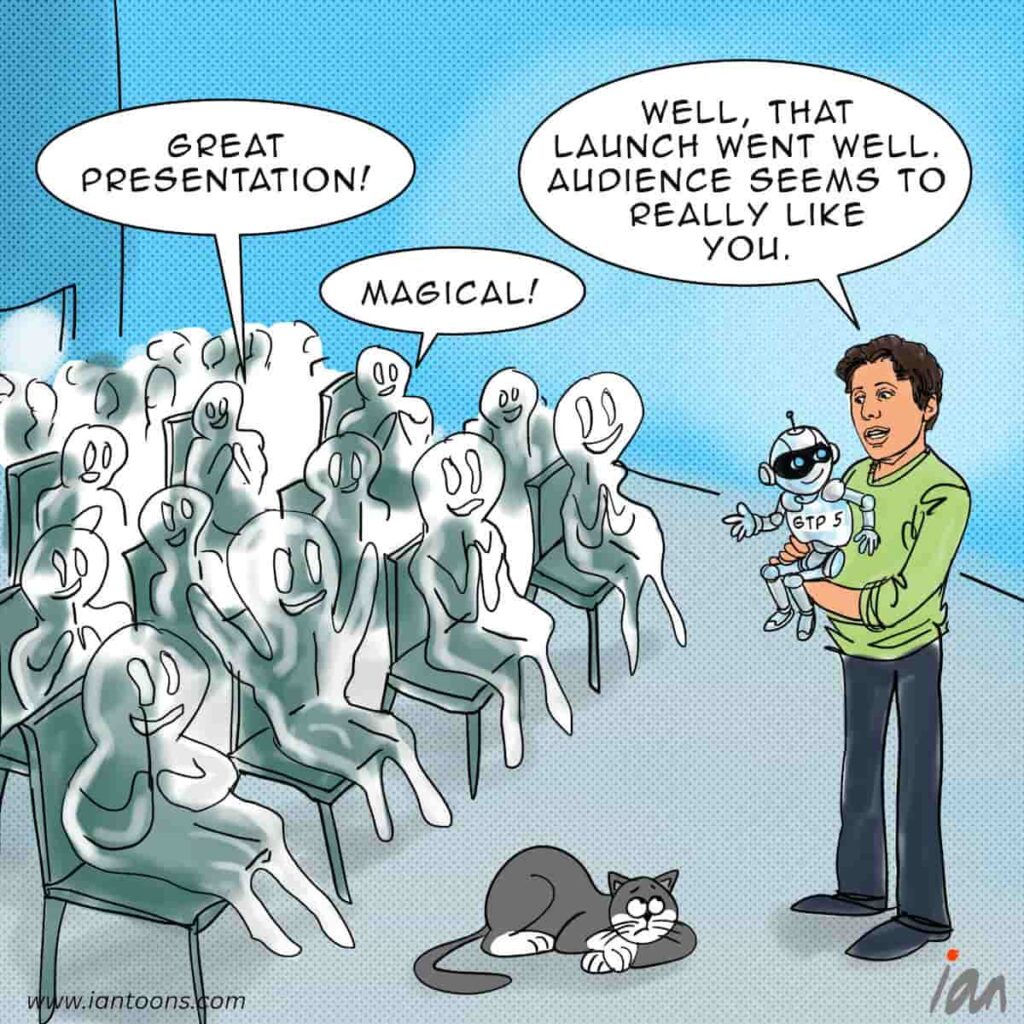

Hallucinations – A cartoon that illustrates how even the latest AI models are still suffering from significant hallucinations, keeping AGI some way off.

Hallucinations happen when an AI delivers false information with absolute confidence. More compute power and bigger models help with accuracy, but they cannot fully solve the root problem. Large language models predict the most probable next word rather than verify truth. As a result, gaps in training data, ambiguous prompts and a lack of grounding mean that errors still slip through.
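To make the "most probable next word" point concrete, here is a minimal toy sketch. It is not how any real model is implemented: the table `NEXT_WORD_PROBS`, its probabilities and the function `predict_next_word` are all invented for illustration, on the assumption that a wrong answer happens to be more common in the training text than the right one.

```python
# Toy illustration only: these probabilities are made up, not taken from a real model.
# They assume the wrong answer ("Sydney") appeared more often in training text
# than the correct one ("Canberra").
NEXT_WORD_PROBS = {
    "The capital of Australia is": {
        "Sydney": 0.55,
        "Canberra": 0.40,
        "Melbourne": 0.05,
    },
}


def predict_next_word(prompt: str) -> str:
    """Return the most probable next word; nothing here checks whether it is true."""
    candidates = NEXT_WORD_PROBS[prompt]
    return max(candidates, key=candidates.get)


print(predict_next_word("The capital of Australia is"))
```

The function returns the highest-scoring word with no step that compares it against a trusted source, which is the gap that grounding approaches try to fill.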

For consumers, this is not just a technical curiosity. It is a trust issue. For example, an AI travel assistant recently invented refund rules, costing a passenger their claim. Even at launch, GPT-5’s demo included a fabricated chart, prompting Sam Altman to publicly apologise for the “mega chart screw-up.” This was a stark reminder that even the “safest” models can mislead.

Some companies are trying to close the gap. Perplexity.ai grounds answers in real-time citations, while LangChain links responses to curated knowledge bases. These guardrails are promising, but until reliability is proven in everyday use, AGI will remain a distant target.

As Mark Twain put it, “It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so.”

Sources:

Conor Murray (May 06, 2025) – Why AI ‘Hallucinations’ Are Worse Than Ever – Forbes

Dave Smith (Dec 24, 2024) – Scientist says the one thing everyone hates about AI is ultimately what helped him win a Nobel Prize – Fortune

Debrabata Pruseth – In Praise of AI Hallucinations: How Creativity Emerges from Uncertainty – Debrabata Pruseth