Testing Times

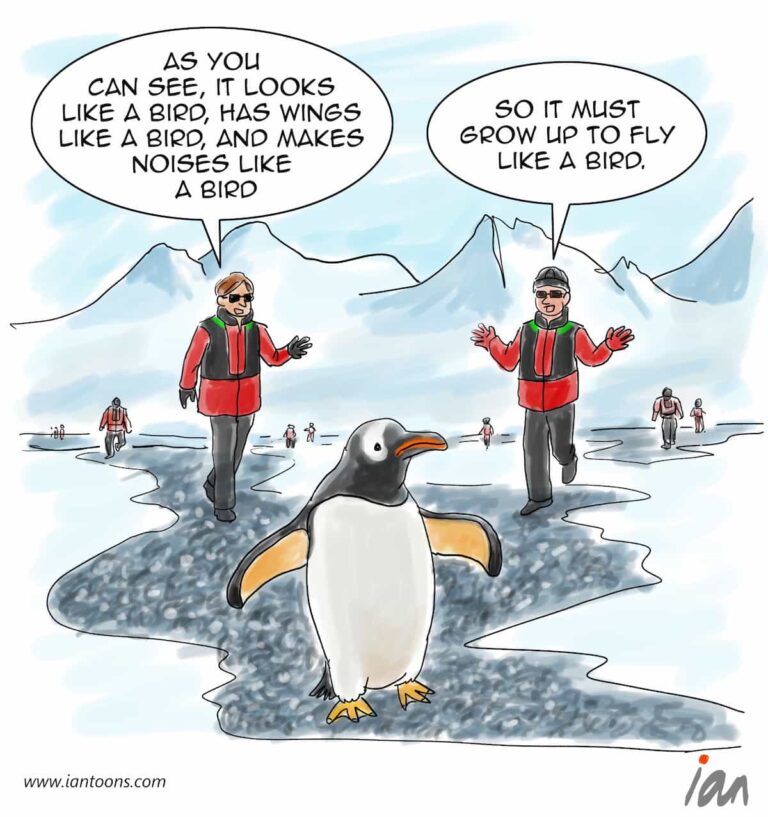

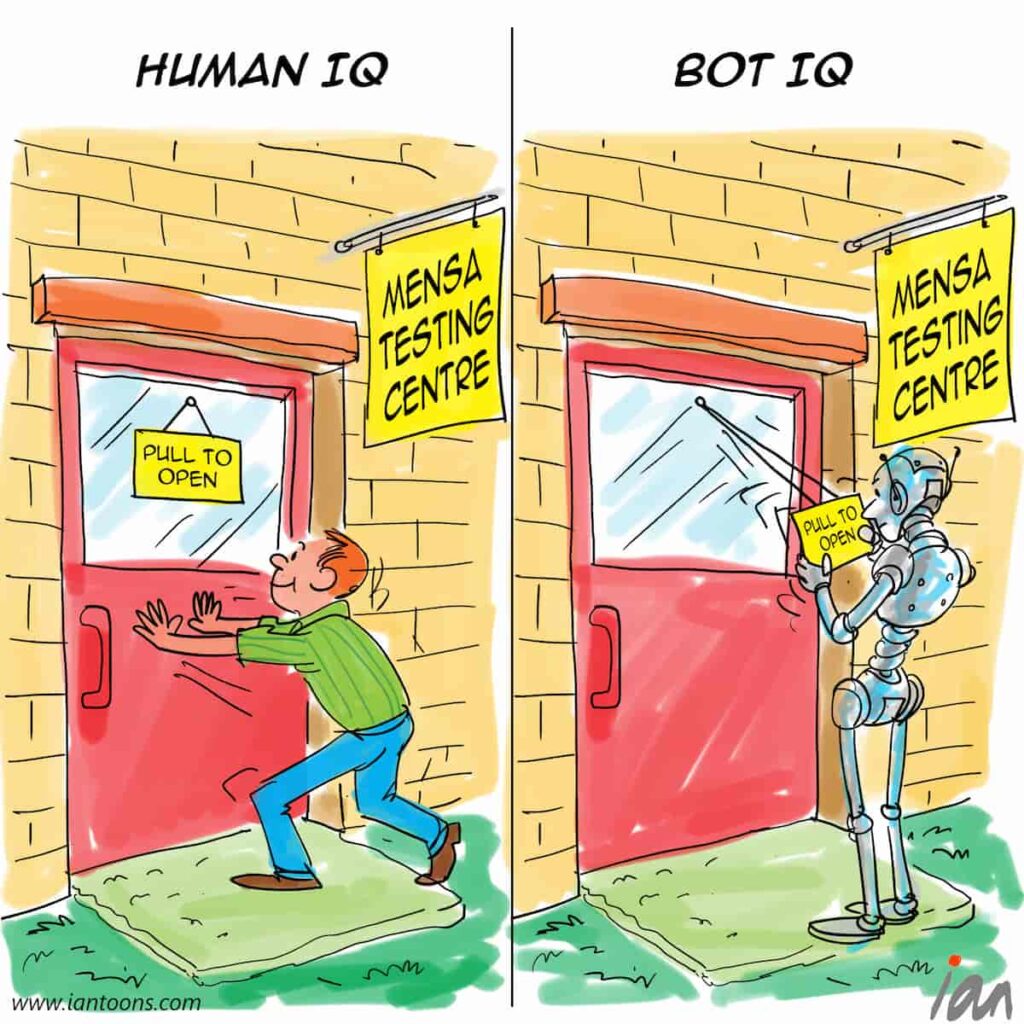

“Testing Times”: a cartoon illustrating that, despite their intellectual sophistication, AI models still make mistakes just as humans do. They simply make different kinds of ‘dumb’ mistakes.

The first IQ test was developed in 1905 to assess a range of cognitive skills, including reasoning, problem-solving, and understanding complex ideas. With the rollout of AI models, researchers are trying to work out where this new technology falls relative to average human IQ.

One such study, by Maxim Lott, found that language models now score above 100 on IQ tests. A score of 100 corresponds to average human intellectual ability.

He found that some models performed better than others. For example, Anthropic’s Claude 3.0 outperformed most other models. He predicts that in another 4 to 10 years (with the launch of Claude-6), a model should get all of the IQ questions right and “be smarter than just about everyone.”

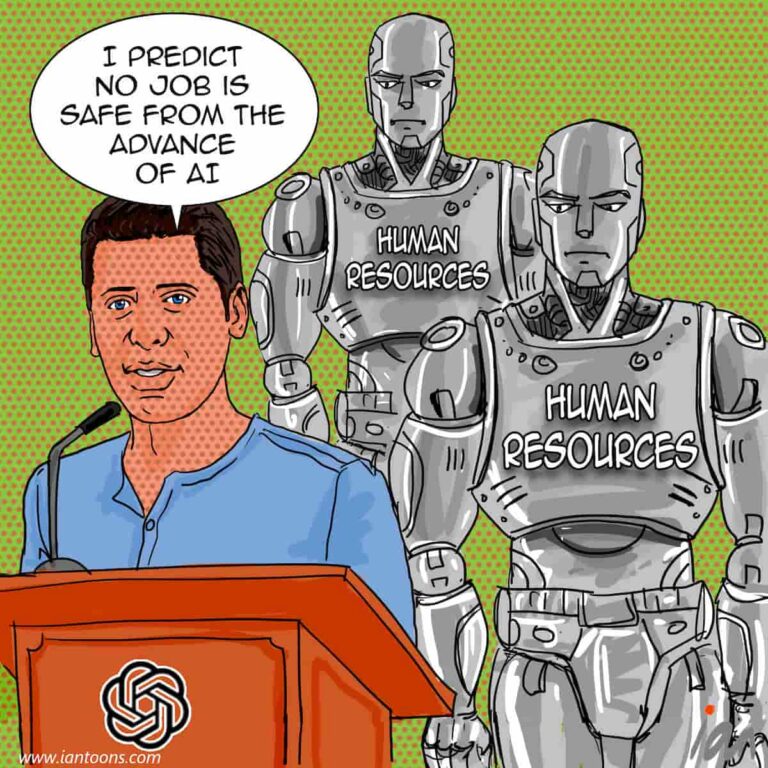

The team at OpenAI has started to think about all of this and last week announced a series of levels that could help translate this intelligence into real-world applications. Level 1, where we are today with ChatGPT 4.0, is the world of chatbots with conversational intelligence.

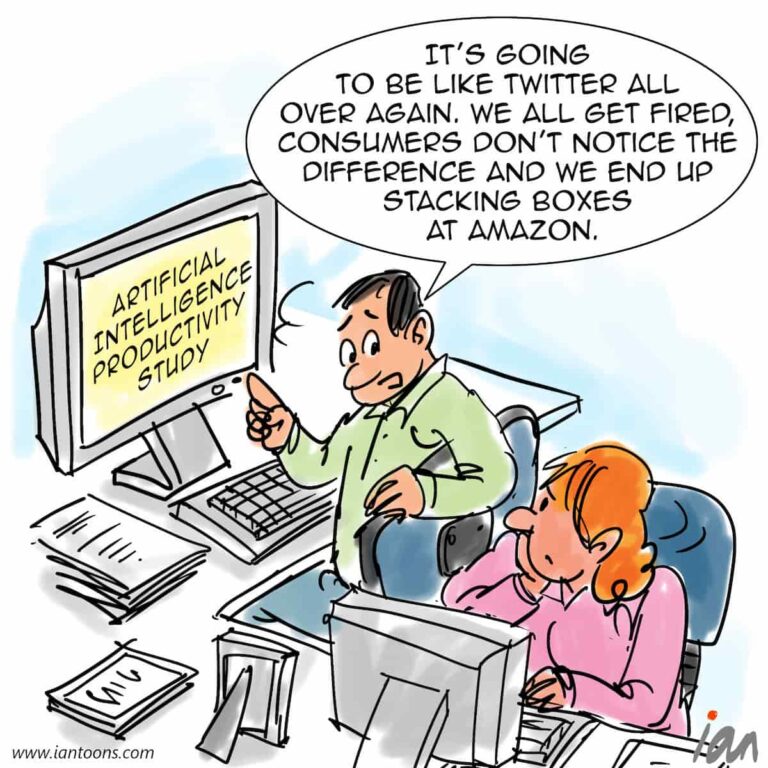

Moving up the levels, we reach models that are “Reasoners,” then “Agents,” then “Innovators,” and finally Level 5, where the bots manage themselves as standalone “Organizations.” Some companies are already working on Level 3+ models that can perform multi-step tasks on behalf of humans, such as booking an entire vacation or working through a complicated coding problem on their own.

However, as Andrew Ng, a prominent AI researcher and co-founder of Google Brain, has stated, “AI is incredibly powerful, but it still lacks a deep understanding of context that humans possess naturally.”
