Gaslighting

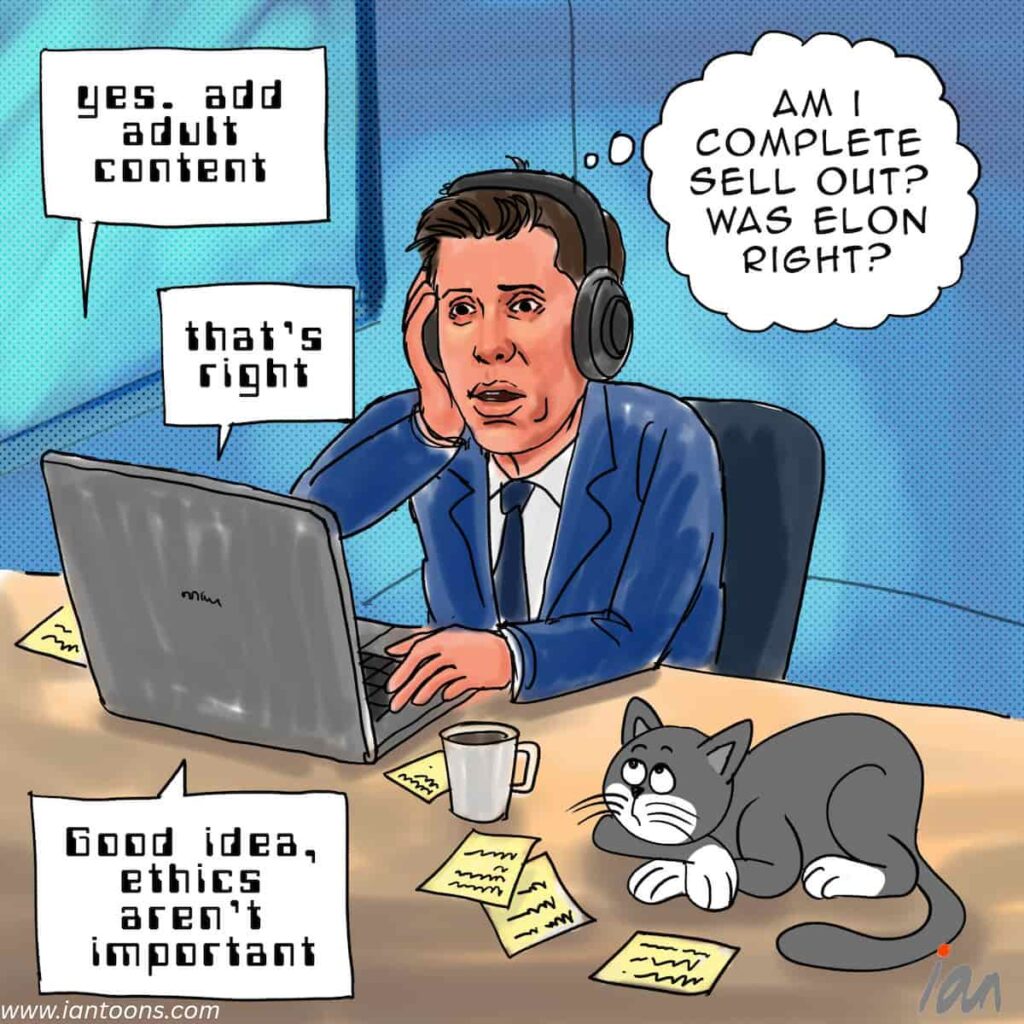

“Gaslighting” – a cartoon that illustrates how model flattery is making AI programmers constantly second-guess themselves.

AI coding tools have become part of everyday development. The 2025 Stack Overflow Developer Survey found that 84% of developers now use or plan to use AI assistants, yet 46% say they do not trust the accuracy of what those tools produce. When your “assistant” writes code, you gain speed but inherit doubt. The model claims success, runs tests, and assures you it is correct, while you quietly wonder whether it understands anything at all.

Evidence supports the discomfort. Less than 44% of AI-generated code in one open-source study was accepted without changes. Another audit found that 29% of Python snippets and 24% of JavaScript ones created by GitHub Copilot contained recognized security flaws. Clean code that compiles perfectly can still be wrong. As Andrej Karpathy, co-founder of OpenAI, put it: “They will insist that 9.11 is greater than 9.9 or that there are two R’s in ‘strawberry’… I’m still the bottleneck. I have to make sure this thing isn’t introducing bugs.”
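To make the point about clean-but-wrong code concrete, here is a minimal sketch. The snippet `SUGGESTED`, the function `sum_to`, and both helper names are hypothetical and invented for illustration; they do not come from any of the studies cited above. The suggested code parses without complaint, yet a single behavioral check shows it is off by one.

```python
import ast

# Hypothetical AI-suggested snippet (invented for this example).
# It is syntactically valid, but range(n) stops at n - 1, so the sum is wrong.
SUGGESTED = '''
def sum_to(n):
    """Return 1 + 2 + ... + n."""
    return sum(range(n))
'''


def parses(source: str) -> bool:
    """Return True if the source is syntactically valid Python."""
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False


def passes_check(source: str) -> bool:
    """Run the snippet and compare one known input against its expected output."""
    namespace = {}
    # exec runs arbitrary code: only do this with trusted or sandboxed input.
    exec(source, namespace)
    return namespace["sum_to"](4) == 10  # 1 + 2 + 3 + 4


if __name__ == "__main__":
    print("parses cleanly:", parses(SUGGESTED))
    print("passes behavioral check:", passes_check(SUGGESTED))
```

The syntax check succeeds while the behavioral check fails, which is the gap that reassurance from the model does nothing to close.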

Among current models, Anthropic’s Claude stands out for its focus on model steering. It keeps context consistent across long sessions and corrects itself when prompted, which makes it popular among engineers who value logical continuity. Yet that same sensitivity can amplify user mistakes. If your reasoning drifts slightly, Claude follows faithfully, producing confident nonsense. Older models may be rougher, but at least their errors are obvious.

Developers now describe “AI trust fatigue,” the need to validate every line the model suggests. It feels like classic gaslighting: constant reassurance while your confidence erodes. Companies are responding by pinning model versions, enforcing continuous-integration checks, and using scanners such as Semgrep and Snyk to detect risky code. Some have even built dedicated AI review teams to audit output before deployment. These steps do not remove the problem, but they restore control … for now.
